The promise of "perfect sound forever," successfully foisted on an unwitting public by the Compact Disc's promoters, at first seemed to put an end to the audiophile's inexorable need to tweak a playback system's front end at the point of information retrieval. Several factors contributed to the demise of tweaking during the period when CD players began replacing turntables as the primary front-end signal source. First, the binary nature (ones and zeros) of digital audio would apparently preclude variations in playback sound quality due to imperfections in the recording medium. Second, if CD's sound was indeed "perfect," how could digital tweaking improve on perfection? Finally, CD players and discs presented an enigma to audiophiles accustomed to the more easily understood concept of a stylus wiggling in a phonograph groove. These conditions created a climate in which it was assumed that nothing in the optical and mechanical systems of a CD player could affect digital playback's musicality.

Recently, however, there has been a veritable explosion of interest in all manner of CD tweaks, opening a digital Pandora's box. An avalanche of CD tweak products (and the audiophile's embrace of them) has suddenly appeared in the past few months, Monster Cable's, AudioQuest's, and Euphonic Technology's CD Soundrings notwithstanding. Most of these tweaks would appear to border on voodoo, with no basis in scientific fact. Green marking pens, an automobile interior protectant, and an "optical impedance matching" fluid are just some of the products touted as producing musical nirvana. The popular media has even picked up on this phenomenon, sparked by Sam Tellig's Audio Anarchist column in Vol.13 No.2 describing the sonic benefits of applying Armor All, the automobile treatment, to a CD's surface. Reports have appeared in print in the Los Angeles Times and Ice Magazine, and on the television networks MTV, VH-1, and CNN, all describing, with varying degrees of incredulity, the CD tweaking phenomenon.

The intensity of my interest in the subject was heightened by a product called "CD Stoplight," marketed by AudioPrism. CD Stoplight is a green paint applied to the outside edge of a CD (not the disc surface, but the 1.2mm disc thickness) that reportedly improves sound quality. I could not in my wildest imagination see how green paint on the disc edge could change, for better or worse, a CD's sound. However, trusting my ears as the definitive test, I compared treated to untreated discs and was flabbergasted. Soundstage depth increased, mids and highs were smoother with less grain, and the presentation became more musically involving.

Other listeners, to a person, have had similar impressions. Since I am somewhat familiar with the mechanisms by which data are retrieved from a CD (I worked in CD mastering for three years before joining Stereophile), this was perplexing: I could think of no plausible explanation for a difference in sonic quality. As we shall see, the light reflected from a CD striking the photo-detector contains all the information encoded on the disc (footnote 1). Even if CD Stoplight could somehow affect the light striking the photo-detector, how could this change make the soundstage deeper? I was simultaneously disturbed and encouraged by this experience: disturbed because it illustrates our fundamental lack of understanding of digital audio's mysteries, and encouraged because identifying previously unexplored phenomena could improve digital audio to the point where today's digital audio era will be regarded as the stone age.

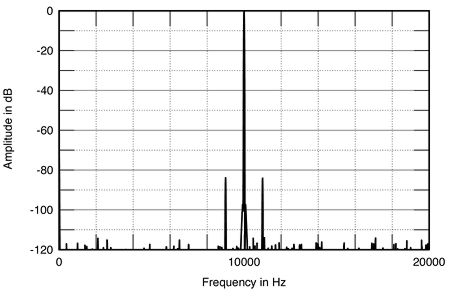

These events prompted me to conduct a scientific examination of several CD "sonic cure-all" devices and treatments. I wanted to find an objective, measurable phenomenon that explains the undeniable musical differences heard by many listeners where, at least according to established digital audio theory, no differences should exist. For this inquiry, I measured several digital-domain performance criteria on untreated CDs, and then on the same CDs treated with various CD tweaks. The parameters measured include data error rates, ability to correct (rather than conceal) data errors, and jitter.

The six CD treatments and devices chosen for this experiment include three that allegedly affect optical phenomena and three that ostensibly affect the CD player's mechanical performance. The three optical treatments tested are CD Stoplight (the green paint), Finyl (a liquid applied to a disc surface that, according to its promoters, provides "optical impedance matching"), and Armor All. The mechanical devices include CD Soundrings, The Mod Squad's CD Damper disc, and the Arcici LaserBase, a vibration-absorbing CD-player platform. I also measured playback signal jitter in a mid-priced CD player and the $4000 Esoteric P2 transport (regarded as having superb sonics). In addition, I looked at the variation in quality of discs made at various CD manufacturing facilities around the world. However, this is not intended as a survey of the musical benefits of these devices and treatments.

Another purpose of the article is to dispel some common misconceptions about CD error correction and its effect on sonic quality. If one believes the promoters of some of these CD treatments, errors are the single biggest source of sonic degradation in digital audio. In reality, errors are the least of CD's problems. However, this has not prevented marketeers from exploiting the audiophile's errorphobia in an attempt to sell products.

For example, Digital Systems and Solutions, Inc., manufacturer of Finyl, claim in their white paper that error concealment "results in a serious degrading of playback fidelity." They also state that errors can get through undetected, leading to a litany of sonic horrors including: "poor articulation of bass and mid-bass notes, attenuation of dynamics and smearing of transients, increased noise with loss of inner detail and intertransient silence, reduced midrange presence that diminishes clarity and transparency, loss of image specificity and focus, reduction of the apparent width and depth of soundstage—virtually eliminating the possibility of holophonic [sic] imagery, decreased resolution of the low level detail that is so necessary to the recovery of hall ambience, altered instrumental and vocal timbres that lack coherence or cohesiveness, obscuring of vocal textures and expression, instrumental lines and musical themes are more difficult to sort out, complex rhythms and tempos are less easily followed, the music will not be as emotionally involving and satisfying an experience as might have otherwise been possible, subtle breath effects on brass or wind instruments are more difficult to discern as are nuances of fingering and bowing on string instruments." This list, they concede, "is not claimed to be complete."

Technical background

Encoding and data retrieval: Before getting into the measurement results, let's arm ourselves with a little technical background on how the CD works.

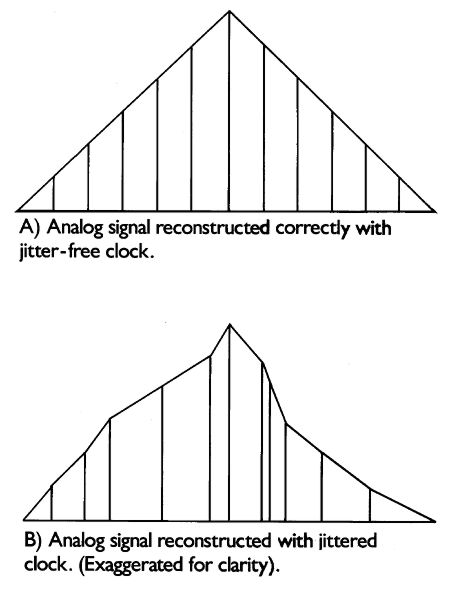

A CD's surface is covered by a single spiral track of alternating "pit" and "land" formations. These structures, which encode binary data, are created during the laser mastering process. The CD master disc is a glass substrate coated with a very thin layer of photosensitive material. The glass master is rotated on a turntable while exposed to a laser beam that is modulated (turned on and off) by the digital data we wish to record on the disc. This creates a spiral of exposed and unexposed areas of the disc. When the master is later put under a chemical developing solution, areas of the photosensitive material exposed to the recording laser beam are etched away, creating a pit. Unexposed areas are unaffected by the developing solution and are called lands. These formations, which are among the smallest manufactured structures, are transferred through the manufacturing process to mass-produced discs. Fig.1 is a scanning electron microscope photograph of a CD surface. Note that a human hair is about the width of 50 tracks.

Fig.1 Scanning electron microscope photograph of a CD surface.

The playback laser beam in the CD player is focused on these tiny pits and held on track by a servo system as the disc rotates. This beam is reflected from the disc to a photo-detector, a device that converts light into voltage. To distinguish between pit and land areas, the pit depth is one-quarter the wavelength of the playback laser beam. When laser light strikes a pit, a portion of the beam is reflected from the surrounding land, while some light is reflected from the pit bottom. Since the portion of light reflected from the pit bottom must travel a longer distance (1/4 wavelength down plus 1/4 wavelength back up), this portion of the beam is delayed by half a wavelength in relation to the beam reflected by the land. When these two beams combine, phase cancellation occurs, resulting in decreased output from the photo-detector. This variable-intensity beam thus contains all the information encoded on the disc.
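The cancellation described above can be checked with a little arithmetic. The following is only an idealized sketch: it assumes the reflected light splits into two beams of equal strength (one from the land, one from the pit bottom) and ignores everything else in a real optical pickup. The function name `relative_intensity` is made up for this illustration.

```python
import cmath
import math


def relative_intensity(pit_depth_in_wavelengths: float) -> float:
    """Combined intensity of two equal beams, normalised to 1.0 when there is no pit.

    Assumption: the land beam and the pit-bottom beam have equal amplitude.
    """
    # Light reflected from the pit bottom travels down and back up,
    # so its extra path is twice the pit depth.
    extra_path = 2.0 * pit_depth_in_wavelengths
    phase = 2.0 * math.pi * extra_path
    # Add the two beams as phasors; divide by 2 so a flat surface gives 1.0.
    amplitude = (1 + cmath.exp(1j * phase)) / 2
    return abs(amplitude) ** 2


for depth in (0.0, 0.125, 0.25):
    print(f"pit depth {depth:5.3f} wavelengths -> relative intensity "
          f"{relative_intensity(depth):.3f}")
```

Under these assumptions the output falls from full intensity with no pit, to half at one-eighth wavelength, to essentially zero at the quarter-wavelength depth. A real player sees a reduced rather than a zero signal, since the beam is never split perfectly evenly.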

Now that we understand how the playback beam/photo-detector can distinguish between pit and land, let's look at how these distinctions represent digital audio data. One may intuitively think that it would be logical for a pit to represent binary one and a land to represent binary zero, or vice versa. This method would certainly work, but a much more sophisticated scheme has been devised that is fundamental to the CD. It is called Eight-to-Fourteen Modulation, or EFM.

This encoding system elegantly solves a variety of data-retrieval problems. In EFM encoding, pit and land do not represent binary data directly. Instead, transitions from pit-to-land or land-to-pit represent binary one, while all other surfaces (land or pit bottom) represent binary zero. EFM encoding takes symbols of 8 bits and converts them into unique 14-bit words, creating a pattern in which binary ones are separated by a minimum of two zeros and a maximum of 10 zeros. The bit stream is thus given a specific pattern of ones and zeros that results in nine discrete pit or land lengths on the disc. The shortest pit or land length encodes three bits, while the longest encodes 11 bits. The blocks of 14 bits are linked by three "merging bits," resulting in an encoding ratio of 17:8. At first glance, it may seem odd that EFM encoding, in more than doubling the number of bits to be stored, can actually increase data density. But just this occurs: Storage density is increased by 25% over unmodulated encoding.
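The run-length rule is easy to experiment with. The sketch below does not reproduce the actual EFM lookup table, which is not given here. It simply counts how many 14-bit patterns obey the "two to 10 zeros" rule, and shows how a string of channel bits maps to pit or land lengths. The function names are invented for this illustration, and the rule is applied within a single word (handling word boundaries is the job of the merging bits).

```python
MIN_ZEROS, MAX_ZEROS = 2, 10  # run-length limits quoted in the text


def obeys_run_lengths(word: str) -> bool:
    """True if a string of '0'/'1' channel bits keeps to the run-length limits."""
    runs = word.split("1")       # zero runs before, between, and after the ones
    between_ones = runs[1:-1]    # only these runs are bounded from below
    return (all(len(r) >= MIN_ZEROS for r in between_ones)
            and all(len(r) <= MAX_ZEROS for r in runs))


def pit_land_lengths(channel_bits: str) -> list:
    """Each '1' marks a pit/land edge, so edge-to-edge distances are the lengths."""
    edges = [i for i, bit in enumerate(channel_bits) if bit == "1"]
    return [b - a for a, b in zip(edges, edges[1:])]


# Try every possible 14-bit pattern and keep those that obey the limits.
valid_words = [w for w in (format(n, "014b") for n in range(2 ** 14))
               if obeys_run_lengths(w)]
print(len(valid_words), "of", 2 ** 14, "patterns qualify; 256 are needed")

# The shortest (100) and longest (10000000000) runs, closed by a final edge.
print(pit_land_lengths("100" + "10000000000" + "1"))
```

Run as written, the count comes to 267, comfortably more than the 256 patterns needed to represent every 8-bit symbol. This is why 14 bits suffice. The second print shows the two extreme runs from the text coming out as lengths of 3 and 11 channel bits.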

EFM has other inherent advantages. By inserting zeros between successive ones, the bandwidth of the signal reflected from the disc is decreased. The data rate from a CD is 4.3218 million bits per second (footnote 2), but the EFM signal has a bandwidth of only 720kHz. In addition, the EFM signal serves as a clock that, among other functions, controls the player's rotational servo.

The signal reflected from the disc is composed of nine discrete frequencies, corresponding to the nine discrete pit or land lengths (footnote 3). The highest-frequency component, called "I3," is produced by the shortest pit or land length and has a frequency of 720kHz. This represents binary data 100. The lowest-frequency component, called "I11," is produced by the longest pit or land length and has a frequency of 196kHz (3/11 of the I3 frequency). This represents binary data 10000000000. The signal reflected from the disc, produced by EFM encoding, is often called the HF (high frequency) signal. The varying periods of the sinewaves correspond to the periods of time required to read the various pit lengths.
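The nine frequencies follow directly from the channel bit rate quoted earlier. As a rough sketch, assume that one full cycle of the HF signal spans a pit plus a land of the same length, so that a run of n channel bits gives a frequency of the bit rate divided by 2n. The function name is hypothetical.

```python
CHANNEL_BIT_RATE = 4_321_800  # channel bits per second, as quoted in the text


def hf_frequency_hz(run_length_bits: int) -> float:
    """Frequency produced by equal-length pits and lands of the given run length.

    Assumption: one cycle of the HF signal = one pit + one land of equal length.
    """
    return CHANNEL_BIT_RATE / (2 * run_length_bits)


for n in range(3, 12):  # the nine run lengths, I3 through I11
    print(f"I{n}: {hf_frequency_hz(n) / 1000:.1f} kHz")
```

On this assumption I3 works out to about 720kHz and I11 to about 196kHz, with the other seven components spaced between them.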

At first impression, the HF signal appears to be analog, not one that carries digital data. However, the zero crossings of the waveforms contain the digital information encoded on the disc. Fig.2 shows the relationship between binary data, pit structure, and the recovered HF signal.

Fig.2 Relationship between binary data, pit structure, and the HF signal. (Reproduced from Principles of Digital Audio, Second Edition (1989), by Kenneth C. Pohlmann, with the permission of the publisher, Howard W. Sams & Company.)

HF signal quality is a direct function of pit shape, which in turn is affected by many factors during the CD manufacturing process. There is a direct correlation between error rates and pit shape. Poorly shaped pits result in a low-amplitude HF signal with poorly defined lines. Figs.3 and 4 show an excellent HF signal and a poor HF signal respectively.

Fig.3 A clean HF signal results from well-shaped pits.

Fig.4 A poor-quality HF signal.

CD data errors: Any digital storage medium is prone to data errors, and the CD is no exception. An error occurs when a binary one is mistakenly read as a binary zero (or vice versa), or when the data flow is momentarily interrupted. The latter, more common in CDs, is caused by manufacturing defects, surface scratches, and dirt or other foreign particles on the disc. Fortunately, the CD format incorporates extremely powerful error detection and correction codes that can completely correct a burst error of up to 4000 successive bits. The reconstructed data are identical to what was missing. This is called error correction. If the data loss exceeds the player's ability to correctly replace missing data, the player makes a best-guess estimate of the missing data and inserts this approximation into the data stream. This is called error concealment, or interpolation.

It is important to make the distinction between correction and concealment: correction is perfect and inaudible, while concealment has the potential for a momentary sonic degradation where the interpolation occurs.
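To see why concealment is only an approximation, consider the simplest possible scheme: replacing a lost sample with the average of its two neighbors. This is an assumption made for illustration. Players differ in how they interpolate, and nothing here describes a particular player's circuit. The `conceal` function is hypothetical.

```python
from typing import List, Optional


def conceal(samples: List[Optional[int]]) -> List[int]:
    """Fill each missing sample (None) with the average of its two neighbours.

    Assumptions: missing samples are isolated and never first or last.
    """
    repaired = list(samples)
    for i, value in enumerate(samples):
        if value is None:
            # Best guess: a straight line between the samples on either side.
            repaired[i] = (samples[i - 1] + samples[i + 1]) // 2
    return repaired


original = [0, 100, 180, 240, 280]      # what was recorded
damaged = [0, 100, None, 240, 280]      # one sample lost beyond correction
print(conceal(damaged))                 # the estimate is close, not identical
```

The estimate for the lost sample is 170 where the recording held 180: plausible, but not the original data. Correction, by contrast, would have returned 180 exactly.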

A good general indication of disc quality (and the claimed error-reduction effects of some CD tweaks) is the Block Error Rate, or BLER. BLER is the number of blocks per second that contain errant data, before error correction. The raw data stream from a CD (called "channel bits") contains 7350 blocks per second, with a maximum allowable BLER (as specified by Philips) of 220. A disc with a BLER of 100 thus has 100 blocks out of 7350 with errant or missing data. In these experiments, Block Error Rate is the primary indicator of a particular tweak's effect on error-rate performance.
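The BLER figures are easier to judge as fractions of the data stream. The short calculation below uses only the numbers quoted in this article. The helper names are invented for the example.

```python
BLOCKS_PER_SECOND = 7350       # blocks per second in the raw data stream
MAX_BLER = 220                 # maximum allowable BLER, as specified by Philips
CHANNEL_BIT_RATE = 4_321_800   # channel bits per second, quoted earlier


def errant_fraction(bler: float) -> float:
    """Fraction of blocks in one second that contain errant data."""
    return bler / BLOCKS_PER_SECOND


def channel_bits_per_block() -> float:
    """Channel bits carried by each block at the quoted rates."""
    return CHANNEL_BIT_RATE / BLOCKS_PER_SECOND


for bler in (100, MAX_BLER):
    print(f"BLER {bler}: {errant_fraction(bler):.2%} of blocks contain errors")
print(f"{channel_bits_per_block():.0f} channel bits per block")
```

A BLER of 100 means that about 1.4% of blocks contain an error before correction. Even a disc at the 220 limit has errors in only about 3% of its blocks. At the quoted rates each block carries 588 channel bits.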

In addition to measuring the effects of CD tweaks on BLER, I explored their potential to reduce interpolations. To do this, I used the Pierre Verany test CD that has intentional dropouts in the spiral track. The disc has a sequence of tracks with increasingly long periods of missing data.

First, I found the track that was just above the threshold of producing an uncorrectable error (called an "E23 error") as analyzed by the Design Science CD Analyzer (see Sidebar). The track was played repeatedly to confirm that the result was consistent, so that any subsequent change could not be ascribed to chance. Then, the same track was played and analyzed, this time after the addition of a CD treatment or device. This twofold approach, measuring a tweak's effect on both BLER and interpolations, would seem to cover the gamut of error-reduction potential.

There are two general misconceptions about CD errors and sound quality: 1) errors are the primary source of sonic degradation; and 2) if there are no uncorrectable errors, there can be no difference in sound.

The first misconception is largely due to the marketing programs of CD-accessory manufacturers who claim their products reduce error rates. Many of the devices tested claim to improve sound quality by reducing the amount of error concealment performed by the CD player. In fact, interpolations (error concealment) rarely occur. In the unlikely event that concealment is performed, it will be momentary and thus have no effect on the overall sound. At worst, a transient tick or glitch would be audible.

To better understand the nature of data errors, a look at CD Read-Only Memory (CD-ROM) is useful. A CD-ROM is manufactured just like an audio CD, but contains computer data (text, graphics, application software, etc.) instead of music. The data retrieved from a CD-ROM must be absolutely accurate to the bit level, after error correction. If even a single wrong bit gets past the error correction, the entire program could crash. The errant bit may be within instructions for the host computer's microprocessor, causing the whole application to come to an instant halt, making the disc useless.

To prevent this, a quality-control procedure is routinely used at the mastering and pressing facility to assure 100% error-free performance. Samples of the finished CD-ROM are compared, bit for bit, to the original source data. For high-reliability applications, each replicated disc undergoes this process. This rigorous testing reveals much about the error-correction ability of the CD's Cross Interleaved Reed-Solomon encoding (CIRC). Throughout dozens of hours of this verification procedure, I cannot remember even a single instance of one wrong bit getting through.
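In principle, a bit-for-bit comparison is nothing more than counting the bits that differ between the source data and the data read back from the disc. The sketch below shows the idea on two short byte strings. It is not the tooling used at a pressing plant, and the function name is made up.

```python
def count_bit_errors(source: bytes, readback: bytes) -> int:
    """Number of bit positions at which the two data streams differ."""
    if len(source) != len(readback):
        raise ValueError("streams must be the same length to compare bit for bit")
    # XOR leaves a 1 wherever the two bytes disagree; count those ones.
    return sum(bin(a ^ b).count("1") for a, b in zip(source, readback))


source = bytes([0b10110010, 0b01111000])
perfect_copy = bytes(source)
one_bad_bit = bytes([0b10110011, 0b01111000])  # lowest bit of first byte flipped

print(count_bit_errors(source, perfect_copy))  # a verified disc reports zero
print(count_bit_errors(source, one_bad_bit))
```

A disc passes only if the count is zero over its entire contents, which is the standard the verification procedure holds every sampled CD-ROM to.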

It could be argued that CD-ROM has additional error-correction ability not found on CD audio discs. This is true, but the additional layer of error correction is almost never invoked. Furthermore, in all the hours of error-rate measuring for this project, I never encountered an E23 error, the first and most sensitive indication of an interpolation (except on the Pierre Verany disc, which has intentional errors). In fact, I saw only one E22 error, the last stage of correction before concealment. In retesting the disc, the E22 error disappeared, indicating it was probably due to a piece of dirt on the disc. Finally, the unlikely occurrence of an uncorrectable error is exemplified by the warning system in the Design Science CD Analyzer. The system beeps and changes the computer's display color to red to alert the operator if even an E22 error (fully corrected) is detected.

http://www.stereophile.com/reference/590jitter/